1. Improving Alexa's voice commands

The future of home

If Disneyland’s “Home of the Future” were updated from the 1960s to today, home automation would surely be the centerpiece. With hordes of competing vendors vying to get their “smart” devices into every room, homes are looking pretty futuristic already. But the fulcrum of the home automation universe, the virtual assistant, just isn’t there yet.

When I got an Amazon Echo Dot last fall, it took a long time for Alexa to understand me. At first its speech recognition software failed to filter out sirens from the fire station across the street, but the software has since improved so much on the back end that Alexa’s “sorry, I didn’t understand that” response is increasingly rare. I saw firsthand how much better the algorithm got at recognizing stutters; Alexa no longer mocks me by saying, “I didn’t find a device or group called ‘the the living living living room lights’ on Ruthie’s account.”

Personification

Amazon deliberately gave Alexa a specific female voice and name, convincingly personifying what is actually a cluster of servers in Amazon’s warehouses. Even writing this piece, I struggle to call Alexa “it” and not “she.” When I ask Alexa to set a timer and she responds, “OK,” I frequently say “thank you” even though the device can’t hear me unless I preface the command with “Alexa.” This personification is deliberate: Amazon wants users to trust its AI and to feel as comfortable as possible with it.

The illusion that Alexa is a person, though, gets totally obliterated when “she” doesn’t understand a command. Its canned response is, “sorry, I’m not sure,” or “hm, I’m not sure what you meant by that question.” Not only does the repetition of the responses break the illusion, the phrases themselves are not humanlike at all. “Turn on the lights” is a simple instruction that any human should understand with minimal room for error. Alexa stumbles hard.

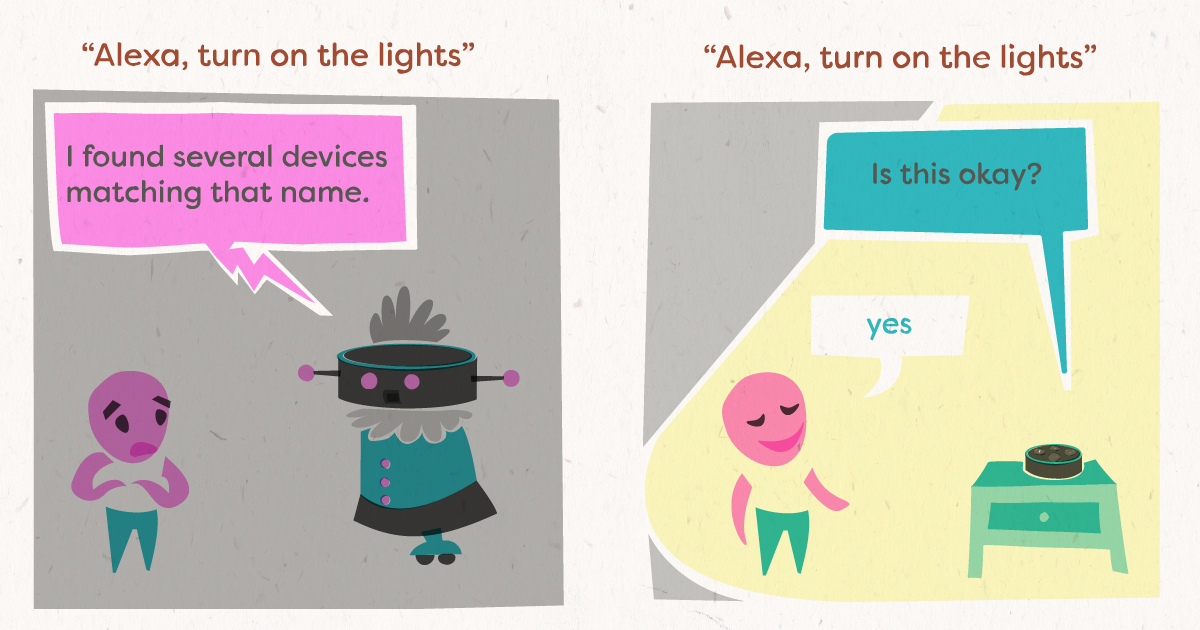

Part of the confusion in Alexa’s programming is that pronouns often don’t carry over to subsequent commands. For example, my saying “Alexa, turn on the lights” prompts the response, “I found several devices matching that name. Which one did you want?” By this point, it would already have been faster to turn on the lights myself. My answer is “all of them.” Then Alexa delivers this clunker of a line: “Sorry, I couldn’t find a device or group named ‘all of them’ in Ruthie’s account.”

Tips for becoming more human

1. Temporarily store pronouns for subsequent commands

2. Execute a chain of tasks with compound commands

3. Make educated guesses and learn from mistakes

The first change I’d make to Alexa’s algorithms is to carry over pronouns to secondary commands. That’s pretty straightforward. The second upgrade would be the ability to string multiple commands together (today, “Alexa, turn on the lights and dim them to 50%” resolves only the first command). The sounds-simple-but-is-totally-not-simple answer to the rest of my frustrations is to have Alexa make educated guesses. A human assistant wouldn’t balk at a simple command like turning on the lights; he or she would simply flip the nearest light switch and ask, “is this okay?” What if Alexa behaved the same way? It would cut out the frustration of repeating commands and open a channel for AI learning, so Alexa could adapt to a user’s preferences more personally next time. The confirmation question “is this okay?” still feels polite and even apologetic. Easier yet, it requires only a “yes” or “no” answer from the user. Of course, computers were never programmed to guess (that goes against everything computers were ever built for), but they’ve got to at least try if they want to take over the world.
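To make the three tips concrete, here is a toy sketch in Python. It has nothing to do with how Alexa actually works: the `ToyAssistant` class, its device names, and the idea of a single "nearest" device are all made up for illustration, and splitting on the word "and" is a deliberately naive stand-in for real language understanding. It also skips the learning step; it only asks "Is this okay?" and does not yet do anything with the answer.

```python
import re
from dataclasses import dataclass, field

PRONOUNS = {"it", "them"}


@dataclass
class ToyAssistant:
    devices: list   # hypothetical device names, e.g. "living room lights"
    nearest: str    # hypothetical "closest device" to fall back on when guessing
    last_targets: list = field(default_factory=list)

    def resolve(self, phrase):
        """Return (targets, guessed) for a spoken device phrase."""
        phrase = phrase.lower().strip()
        if phrase.startswith("the "):
            phrase = phrase[4:]
        # Tip 1: a pronoun refers back to whatever the last command acted on.
        if phrase in PRONOUNS and self.last_targets:
            return self.last_targets, False
        matches = [d for d in self.devices if phrase in d]
        # Tip 3: when several devices match, guess the nearest one.
        if len(matches) > 1 and self.nearest in matches:
            return [self.nearest], True
        return matches, False

    def handle(self, utterance):
        """Run every clause in the utterance and return the spoken replies."""
        replies = []
        # Tip 2: naive compound commands, one clause per "and".
        for clause in re.split(r"\s+and\s+", utterance.lower().strip()):
            on_off = re.fullmatch(r"turn (on|off) (.+)", clause)
            dim = re.fullmatch(r"dim (.+) to (\d+)%", clause)
            if on_off:
                action, phrase = f"turned {on_off.group(1)}", on_off.group(2)
            elif dim:
                action, phrase = f"dimmed to {dim.group(2)}%", dim.group(1)
            else:
                replies.append(f"Sorry, I didn't understand '{clause}'.")
                continue
            targets, guessed = self.resolve(phrase)
            if not targets:
                replies.append(f"Sorry, I couldn't find '{phrase}'.")
                continue
            self.last_targets = targets  # remembered for the next pronoun
            reply = f"OK, {', '.join(targets)} {action}."
            if guessed:
                reply += " Is this okay?"  # confirm the guess with a yes/no question
            replies.append(reply)
        return replies


assistant = ToyAssistant(
    devices=["living room lights", "bedroom lights"],
    nearest="living room lights",
)
for line in assistant.handle("Turn on the lights and dim them to 50%"):
    print(line)
# OK, living room lights turned on. Is this okay?
# OK, living room lights dimmed to 50%.
```

Even in this toy version, the guess-then-confirm step is what removes the dead end: the ambiguous "the lights" gets an action plus a yes/no question instead of a request to start over.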